To simplify all of this I have created a Docker container that you can use.

vf finally the following specifies the output format: -vcodec libx264 -preset veryfast -pix_fmt yuv420p -strict -2 -y -f mpegts -r 25 udp://239.0.0.1:1234?pkt_size=1316 Below filter adds the timecode and some other information to the video using the drawtext filter. f lavfi -i anullsrc=r=48000:cl=stereo -c:a aac -shortestĪbove adds a silent audio track as some encoders requires an audio pid to be present in the mpeg-ts stream.

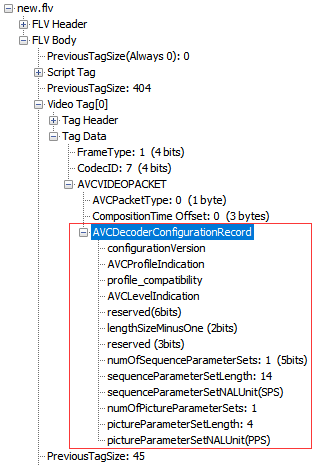

One thing to note is that the genpts flag only generate PTS when the value is missing and that is the reason to extract the h264/AVC bitstream first. This part of the second process reads the input from stdin and assumes that the framerate of the video is 25 frames per second and generates new PTS values. ffmpeg -framerate 25 -fflags +genpts -r 25 -re -i. The line above “extracts” the h264/AVC bitstream from the input file and once end-of-file is reached it will seek to the start of the file and repeat this until the program is stopped. ffmpeg -stream_loop -1 -i /mnt/Sunrise.mp4 -map 0:v -vcodec copy -bsf:v h264_mp4toannexb -f h264. The first process writes the output bitstream to stdout and the other process read the bitstream from stdin. One process to read and decode the input file and another process to generate the mpeg-ts stream. It can be in place to demystify a bit what this does. ffmpeg -stream_loop -1 -i /mnt/Sunrise.mp4 -map 0:v -vcodec copy -bsf:v h264_mp4toannexb -f h264 - | ffmpeg -framerate 25 -fflags +genpts -r 25 -re -i -f lavfi -i anullsrc=r=48000:cl=stereo -c:a aac -shortest -vf -vcodec libx264 -preset veryfast -pix_fmt yuv420p -strict -2 -y -f mpegts -r 25 udp://239.0.0.1:1234?pkt_size=1316 One use-case for this could be if you want to measure the distribution and streaming live latency added in the live ABR transcoder and video player. The following ffmpeg command line will produce an mpeg-ts multicast from a video file that is looping and with the local time burned into the video.
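If you prefer to run the two processes directly, the pipeline can be wrapped in a small bash script. This is a minimal sketch, not the contents of the Docker container: the defaults mirror the command above, while the variable names (`INPUT`, `FPS`, `DEST`, `VF`) and the helper functions `print_pipeline` and `run_pipeline` are hypothetical. Since the drawtext expression is not reproduced here, `VF` is empty by default and `-vf` is only added when you set it yourself.

```bash
#!/usr/bin/env bash
# Sketch of the two-process looping mpeg-ts pipeline, with the hard-coded
# values moved into variables. Defaults mirror the command in the post.
# VF is a hypothetical hook: set it to your own drawtext filter expression.

INPUT="${INPUT:-/mnt/Sunrise.mp4}"                  # file to loop
FPS="${FPS:-25}"                                    # frame rate assumed for new PTS
DEST="${DEST:-udp://239.0.0.1:1234?pkt_size=1316}"  # multicast output URL
VF="${VF:-}"                                        # optional -vf filter graph

# Process 1: loop the file forever, copy the H.264 video without re-encoding
# and write it to stdout as a raw Annex B bitstream (no container timestamps).
READER=(ffmpeg -stream_loop -1 -i "$INPUT" -map 0:v -vcodec copy
        -bsf:v h264_mp4toannexb -f h264 -)

# Process 2: read the raw bitstream from stdin at native rate (-re), assume
# $FPS fps, generate missing PTS (+genpts) and add a silent AAC track.
SENDER=(ffmpeg -framerate "$FPS" -fflags +genpts -r "$FPS" -re -i -
        -f lavfi -i anullsrc=r=48000:cl=stereo -c:a aac -shortest)
if [[ -n "$VF" ]]; then
  SENDER+=(-vf "$VF")
fi
# Encode with libx264 and send the result as mpeg-ts over UDP.
SENDER+=(-vcodec libx264 -preset veryfast -pix_fmt yuv420p -strict -2 -y
         -f mpegts -r "$FPS" "$DEST")

# Print both command lines, shell-quoted, so they can be checked before running.
print_pipeline() {
  printf '%q ' "${READER[@]}"
  printf '| '
  printf '%q ' "${SENDER[@]}"
  printf '\n'
}

# Pipe process 1 into process 2; the ( ) body keeps pipefail local to a subshell.
run_pipeline() (
  set -o pipefail
  "${READER[@]}" | "${SENDER[@]}"
)
```

Saved as, for example, `pipeline.sh` (a hypothetical name), `source pipeline.sh && print_pipeline` shows the exact command lines and `run_pipeline` starts streaming. Keeping the arguments in arrays rather than one long string avoids quoting problems with the `?` in the UDP URL and with filter expressions that contain spaces.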

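To make the 25 frames per second assumption concrete: mpeg-ts timestamps (PTS) count ticks of a 90 kHz clock, so at 25 fps consecutive frames are 90000 / 25 = 3600 ticks apart. The small bash sketch below only illustrates that arithmetic; the names `TS_CLOCK_HZ`, `TICKS_PER_FRAME` and `pts_offset` are made up for the example, and the offsets are relative to the first frame, since the muxer may start the stream at a non-zero PTS.

```bash
#!/usr/bin/env bash
# Illustrative PTS spacing for a constant-frame-rate mpeg-ts stream.
TS_CLOCK_HZ=90000   # mpeg-ts PTS clock rate
FPS=25              # frame rate assumed by the second ffmpeg process

# Ticks between consecutive frames; integer division is exact because 25 divides 90000.
TICKS_PER_FRAME=$(( TS_CLOCK_HZ / FPS ))

# PTS offset of frame n relative to the first frame.
pts_offset() {
  local n=$1
  echo $(( n * TICKS_PER_FRAME ))
}

echo "ticks per frame: $TICKS_PER_FRAME"            # ticks per frame: 3600
echo "frame 25 (one second in): $(pts_offset 25)"   # frame 25 (one second in): 90000
```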